Real or fake? A research team from Erlangen has figured out how to reliably spot deepfakes.

Published on January 27, 2026

Deepfakes, meaning AI-generated images or videos, are becoming so realistic that they are almost impossible to spot with the naked eye. New deepfake tools appear constantly, and many detection systems struggle to keep up. That’s why Sandra Bergmann, a PhD student at Friedrich-Alexander University Erlangen-Nuremberg (FAU), is working on ways to detect synthetic images automatically and reliably. Together with Professor Christian Riess and the cybersecurity company secunet Security Networks AG, she built a prototype called VeriTrue as part of the SPRIN-D “Deepfake Detection and Prevention” Challenge, and it made it to the finals.

Picture: © FAU / Giulia Iannicelli

Research between real and fake

Sandra Bergmann’s research tackles one of the most delicate questions raised by today’s AI development: how to tell synthetic images apart from real ones, even when those real images have been processed with AI-based compression.

A key part of her PhD work focuses on JPEG AI, a new image compression standard powered by neural networks. This type of compression leaves traces that can look a lot like those left by AI-generated images. And that’s exactly what her forensic analysis dives into.

“It’s basically a compression method that uses neural networks,” she explains. “It leaves behind traces similar to those you’d find in AI-generated images.”

Not every image that shows signs of AI processing is a deepfake. AI-compressed images start out as real photos; they have only been processed by neural networks, not created from scratch. Her research aims to reliably distinguish these compressed real photos from fully AI-generated images.

Another big focus of her work? Spotting deepfakes in real-world situations. Over the course of a three-year project with Nürnberger Versicherung (a German insurance company), Sandra worked on detecting manipulated damage claim photos. One eye-opening moment: realizing just how fast and easy it is to create a convincing fake image with today’s tools.

Deepfakes as a social challenge

For Sandra, one thing’s clear: deepfakes aren’t just a tech issue — they’re a real threat to society. It’s easier than ever to create convincing fakes, and social media spreads them at lightning speed.

“We live in a time where social media is totally normal, and stuff gets shared so fast — people don’t even stop to ask if it’s real or fake.”

And it’s not just about political misinformation. Deepfakes can also be used for economic fraud, for example as fake photos submitted as evidence. Because AI-generated images keep getting more convincing, it becomes ever harder to tell what’s real.

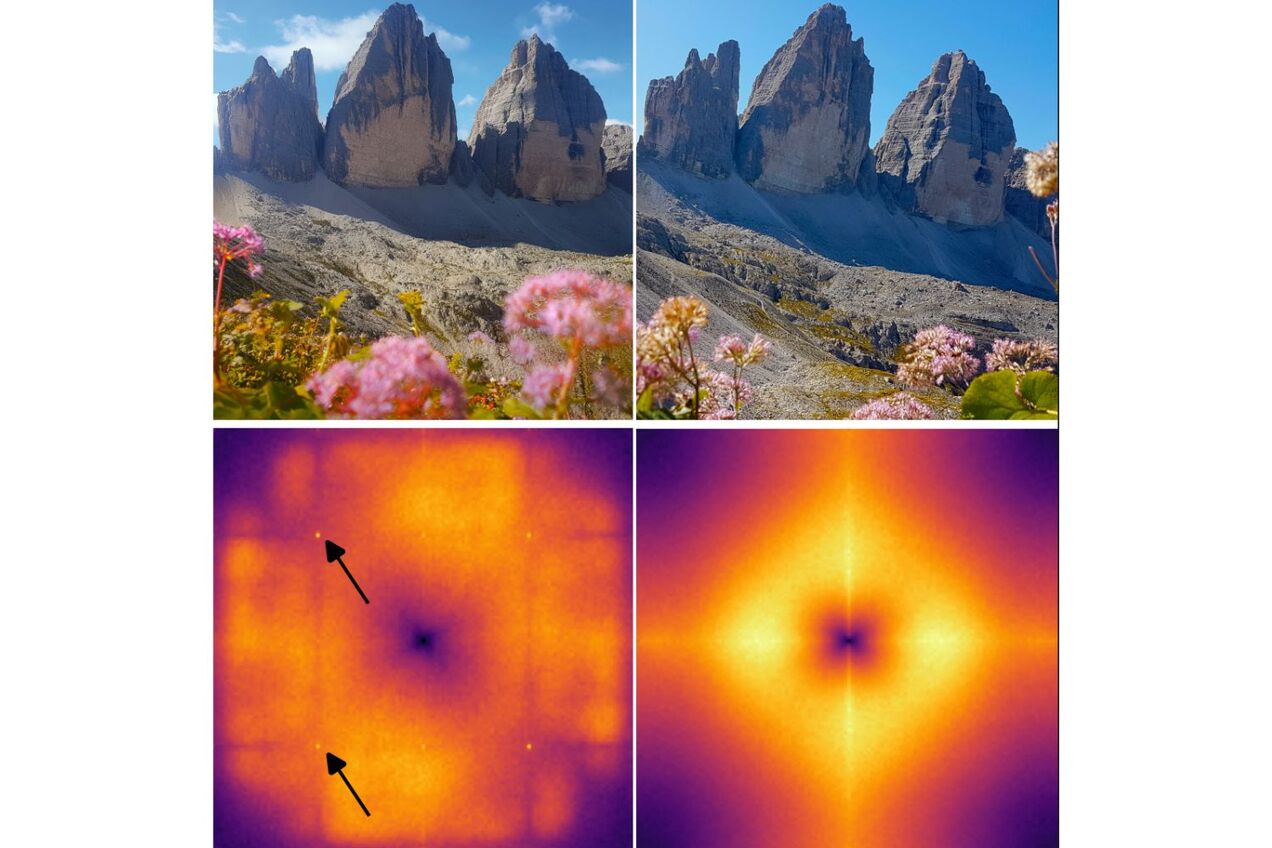

To show that there are solid technical clues for spotting AI-generated images, Sandra shares a clear example: a fake AI image of the Drei Zinnen (on the left) compared to a real vacation photo (on the right).

The key difference? The frequency spectrum. The AI image (made with Midjourney V7) shows regularly spaced peaks, a typical artifact of many generative models that is often attributed to the upsampling steps inside their networks. The real photo shows no such pattern.
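The spectral comparison above can be sketched in a few lines of NumPy. This is not the FAU/secunet detector, only a minimal illustration under stated assumptions: the image is already loaded as a 2D grayscale array, a 3x3 box-filter residual stands in for the stronger denoisers used in forensic practice, and the hypothetical `peak_score` simply divides the strongest spectral component by the median one. The 8-pixel grid in the demo is a synthetic stand-in for a generator artifact, not data from the Midjourney example.

```python
import numpy as np


def noise_residual(gray: np.ndarray) -> np.ndarray:
    """Subtract a 3x3 box-blurred copy to suppress scene content.

    Assumption: a simple box filter stands in for the stronger denoisers
    used in forensic practice. Borders wrap around via np.roll.
    """
    img = gray.astype(np.float64)
    blurred = np.zeros_like(img)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            blurred += np.roll(img, shift=(dy, dx), axis=(0, 1))
    return img - blurred / 9.0


def magnitude_spectrum(gray: np.ndarray) -> np.ndarray:
    """Centered magnitude of the 2D Fourier transform of the residual."""
    return np.abs(np.fft.fftshift(np.fft.fft2(noise_residual(gray))))


def peak_score(gray: np.ndarray) -> float:
    """Hypothetical score: strongest spectral component divided by the median.

    A strong, isolated peak (such as those produced by a periodic artifact)
    raises the ratio; a spectrum without such peaks stays comparatively flat.
    """
    spec = magnitude_spectrum(gray)
    return float(spec.max() / np.median(spec))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    size = 256
    # Stand-in for a real photo: plain Gaussian noise, no periodic structure.
    plain = rng.normal(128.0, 10.0, size=(size, size))
    # Hypothetical generator artifact: a faint grid repeating every 8 pixels.
    yy, xx = np.mgrid[0:size, 0:size]
    gridded = plain + 5.0 * ((xx % 8 == 0) | (yy % 8 == 0))
    print(f"plain:   {peak_score(plain):.1f}")
    print(f"gridded: {peak_score(gridded):.1f}")
```

On a real image, a high score is a hint, not proof: classic JPEG compression works on 8x8-pixel blocks and can leave periodic spectral peaks of its own, which is one reason compressed real photos need careful handling in this kind of analysis.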

You can’t see these artifacts with the naked eye, but image forensics can. That is what makes these tools so powerful: they provide technical evidence even when the human eye can’t tell the difference. That’s why it’s more important than ever not to simply trust what you see, but to verify images with technical tools. In everyday life, that means checking the source, understanding the context, and not believing everything at first glance.

All the way to the SPRIN-D Challenge finals

As part of the nationwide innovation challenge “Deepfake Detection and Prevention” run by SPRIN-D, Sandra and her colleagues at Friedrich-Alexander University Erlangen-Nuremberg (FAU) teamed up with secunet Security Networks AG to develop a prototype.

Instead of relying on a single classifier, the tool combines multiple detectors, each designed to pick up on different aspects of deepfakes. Some are trained to recognize patterns typical of known AI image generators, while others are deliberately trained without seeing any deepfakes at all. Why? To catch outliers, such as images from new or unknown generators.

“We use a mix of detectors that specialize in different things — some know lots of deepfakes, others none at all. That way, we can even catch new generators. And we connect all those detectors smartly to make a final decision.”

This design lets the system flag new types of deepfakes even if it has never seen anything like them before. It also makes the tool well suited to screening large batches of images automatically, without checking each one by hand, which is a real advantage for authorities and insurance companies.
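How the team actually connects its detectors is not described in detail. As a minimal sketch of the general idea, assuming every detector returns a calibrated score between 0 and 1, the following Python fuses the outputs with a weighted sum of log-odds, the form a logistic-regression combiner takes. The detector names, weights, and scores are hypothetical, chosen only to illustrate the case described in the quote: an image from an unknown generator that slips past the specialized classifiers but is flagged by the detector trained without deepfakes.

```python
import math
from dataclasses import dataclass


@dataclass
class DetectorOutput:
    name: str
    # Assumption: each detector reports a calibrated score in (0, 1), where
    # higher means "more likely synthetic" (or, for the anomaly detector,
    # "less like the real images it was trained on").
    score: float


def logit(p: float, eps: float = 1e-6) -> float:
    """Convert a probability to log-odds, clipping to avoid infinities."""
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))


def fuse(outputs: list[DetectorOutput], weights: dict[str, float], bias: float = 0.0) -> float:
    """Late fusion: sigmoid of the weighted sum of per-detector log-odds.

    This is the form a logistic-regression combiner takes; in practice the
    weights and bias would be fitted on held-out validation data.
    """
    z = bias + sum(weights[o.name] * logit(o.score) for o in outputs)
    return 1.0 / (1.0 + math.exp(-z))


if __name__ == "__main__":
    # Hypothetical setup: two classifiers trained on known generators and
    # one anomaly detector trained without any deepfake examples.
    weights = {"known_gen_a": 1.0, "known_gen_b": 1.0, "anomaly": 2.0}
    unknown_generator = [
        DetectorOutput("known_gen_a", 0.10),
        DetectorOutput("known_gen_b", 0.10),
        DetectorOutput("anomaly", 0.98),
    ]
    real_photo = [
        DetectorOutput("known_gen_a", 0.10),
        DetectorOutput("known_gen_b", 0.10),
        DetectorOutput("anomaly", 0.20),
    ]
    print(f"unknown generator: {fuse(unknown_generator, weights):.3f}")
    print(f"real photo:        {fuse(real_photo, weights):.3f}")
```

A weighted log-odds rule lets one confident detector outvote several uncertain ones, which is the behaviour needed to catch outliers. The price is that the weights must be fitted and re-checked on held-out data; otherwise a single miscalibrated detector can dominate the decision.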

The approach got the team to the final round of the SPRIN-D Challenge. And while they didn’t take home the top prize, it was still a success: the first prototype is ready!

Sandra Bergmann studied medical engineering and electronic/mechatronic systems at the Nuremberg Institute of Technology (Georg Simon Ohm). Since 2021, she’s been a PhD student in the Multimedia Security research group at the Chair of IT Security Infrastructures at FAU Erlangen-Nuremberg.

Her research focuses on forensic analysis of AI-compressed and AI-generated images. As part of her doctoral work, she spent three years working on a project with Nürnberger Versicherung, developing tools to detect image-based fraud.

Most recently, she took part in the SPRIN-D Funke “Deepfake Detection and Prevention” challenge, where she and a team from secunet Security Networks AG built a prototype for robust deepfake detection.

Alina Laßen

Working Student, Marketing & Project Management

NUEDIGITAL
